Apple’s Child Protection Features

Apple’s child protection features for iOS and iPadOS have been in the news lately. We’ve seen a lot of misinformation spreading on social media about whether Apple is scanning photos, how private users’ photos remain and how Apple treats photos stored in iCloud. In this article, we explain what changes Apple will implement this fall and what those changes mean for you. We hope to dispel some common misunderstandings about the new initiatives.

I’m out of the loop. What did Apple announce?

On August 5th, Apple announced new child safety features called Expanded Protections for Children, which Apple will include with iOS 15 when it launches later this year. Apple designed the new measures to protect children online and stem the spread of child sexual abuse material. The program consists of three new tools:

- New child communications safety features in Messages.

- Enhanced detection of Child Sexual Abuse Material (CSAM) content in iCloud.

- Updated guidance and resources in Siri and Search.

Is it true that Apple is scanning or looking at my photos?

Unequivocally and flatly, no. Keep reading to find out how and why.

What are the new child communication safety features in Messages?

The Messages app will have new tools to alert children and their parents when a child’s device receives or sends sexually explicit photos. This new tool only applies to children in a shared iCloud family account.

If you’re an adult, this tool doesn’t apply to you, and nothing is changing with your messages. The new Messages safety tools are opt-in only. Upgrading your device to iOS 15 will not automatically enable the new Messages protections for children.

How does Communications Safety for Children in Messages work?

The Messages app uses on-device machine learning to warn about explicit content. This means Apple will not have access to private communications. If a child sends or receives an explicit image, parents and the child will receive a notification. The alert does not notify Apple or law enforcement.

Parental notifications only apply to children 12 or younger in a family iCloud account. Parents cannot receive notifications for a child 13 or older; those 13 or older can still receive the content warnings if they choose.
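The age rules above can be summarized in a few lines of code. This is only a sketch of the policy as we have described it, not Apple’s implementation: the function name, the `SafetyBehavior` type and the age of 18 for adulthood are our own assumptions for illustration.

```python
from dataclasses import dataclass

ADULT_AGE = 18              # assumption: accounts aged 18+ are treated as adults
PARENT_NOTIFY_MAX_AGE = 12  # from the article: parents are notified only for 12 or younger


@dataclass(frozen=True)
class SafetyBehavior:
    """Hypothetical summary of what happens when an explicit image is detected."""
    warns_child: bool
    notifies_parent: bool


def messages_safety_behavior(age: int, in_family_account: bool, opted_in: bool) -> SafetyBehavior:
    # Adults, accounts outside a shared family, and families that have not
    # opted in see no change at all.
    if age >= ADULT_AGE or not in_family_account or not opted_in:
        return SafetyBehavior(warns_child=False, notifies_parent=False)
    # Children get the content warning; parents are notified only for the
    # youngest age group.
    return SafetyBehavior(warns_child=True, notifies_parent=age <= PARENT_NOTIFY_MAX_AGE)
```

For example, a 10-year-old in an opted-in family account gets both the warning and the parental notification, while a 15-year-old in the same family gets the warning only.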

It is important to note that this feature applies to the Messages application and not the Messages service. Messages’ end-to-end encryption is not going away; only you and your message’s recipients can read your messages. The required image processing and analysis occurs on the device, not a server.

What are the enhanced protections with Child Sexual Abuse Material in iCloud?

Child Sexual Abuse Material (CSAM) is a term used by the National Center for Missing and Exploited Children (NCMEC). It refers to any content that depicts sexually explicit activities involving a child.

Apple is implementing CSAM detection for any device using iCloud Photo Library. The detection is automatic and required if the device syncs photos to iCloud. The technology enables Apple to report accounts that exceed a threshold of matches to NCMEC.

How does CSAM detection in iCloud work?

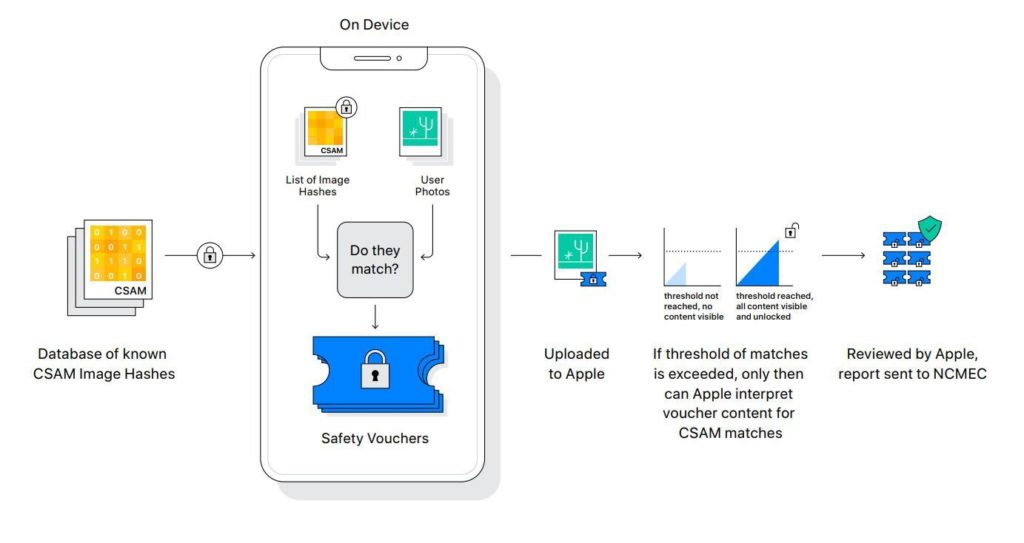

CSAM detection is both the most commonly misunderstood and the most controversial aspect of Apple’s new tools. It’s also the most technical. We’ll include a link to a technical explanation below for those interested in the details. The most straightforward description is that each photo in your iCloud Photo Library is “fingerprinted” with what is called a “hash.” A hash is an obfuscated number derived from the image and protected by two layers of encryption. An iOS device uses on-device processing to match each hash against a database of hashes of known CSAM images. If an iCloud account exceeds a threshold of matched hashes, Apple manually reviews the report to confirm the match. If the match is confirmed, Apple disables the user’s iCloud account and sends a report to NCMEC. A user can file an appeal to reinstate their account if they believe Apple made a mistake.
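To make the idea of “fingerprint, match, then apply a threshold” concrete, here is a deliberately simplified sketch. It is not Apple’s system: we use SHA-256 as a stand-in fingerprint, a plain set lookup instead of encrypted matching, and a made-up threshold value. Apple’s published design relies on a perceptual hash and private set intersection, which this sketch does not attempt to reproduce. All function names here are hypothetical.

```python
import hashlib

# Hypothetical value for illustration; the article does not state the real threshold.
MATCH_THRESHOLD = 3


def fingerprint(image_bytes: bytes) -> str:
    """Stand-in fingerprint: SHA-256 of the raw bytes (NOT Apple's perceptual hash)."""
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(photos: list[bytes], known_hashes: set[str]) -> int:
    """Count how many photos have a fingerprint present in the known-hash database."""
    return sum(1 for photo in photos if fingerprint(photo) in known_hashes)


def needs_human_review(photos: list[bytes], known_hashes: set[str],
                       threshold: int = MATCH_THRESHOLD) -> bool:
    """An account is escalated only when its match count exceeds the threshold."""
    return count_matches(photos, known_hashes) > threshold
```

The point of the sketch is the control flow: only fingerprints are compared, never the pictures themselves, and a single match on its own triggers nothing. Escalation to a human reviewer happens only once the number of matches passes the threshold.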

How accurate is Apple’s hash matching? How likely is it that Apple would make a mistake?

According to Apple, the chance that its system would mistakenly flag a given iCloud account is less than one in one trillion per year. In addition, if an iCloud account exceeds the predetermined threshold, Apple conducts a manual, human review before making a report to NCMEC.
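Why does a threshold make mistakes so unlikely? The sketch below shows the general principle with a simple binomial model. It assumes every photo has the same small, independent chance of a false match. That model and any numbers you feed it are our own illustration, not Apple’s published figures or method, and the function name is hypothetical.

```python
import math


def account_flag_probability(num_photos: int, per_image_rate: float, threshold: int) -> float:
    """Probability of MORE than `threshold` false matches among `num_photos` photos,
    assuming independent errors with probability `per_image_rate` each (0 < rate < 1)."""
    log_p = math.log(per_image_rate)
    log_q = math.log1p(-per_image_rate)
    total = 0.0
    for k in range(threshold + 1, num_photos + 1):
        # Log of the binomial probability of exactly k false matches,
        # computed with lgamma to avoid overflow for large libraries.
        log_term = (
            math.lgamma(num_photos + 1)
            - math.lgamma(k + 1)
            - math.lgamma(num_photos - k + 1)
            + k * log_p
            + (num_photos - k) * log_q
        )
        total += math.exp(log_term)
    return total
```

Calling the function with the same library size and error rate but a higher threshold returns a dramatically smaller probability. Requiring many matches, rather than one, is what drives the account-level error rate down.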

Can I read a more technical explanation of Apple’s CSAM detection?

Sure. The best explanation we’ve found is “A Review of the Cryptography Behind the Apple PSI System” by Benny Pinkas at Bar-Ilan University.

What are the changes Apple is making to Siri and Search?

Apple is updating Siri and Search in iOS and iPadOS to help children remain safe and get help with unsafe situations. According to Apple, both are also being updated “to intervene when users perform searches for queries related to CSAM. These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue.”

Can I opt out of these initiatives?

It depends.

You are not automatically opted into the child communication protections in Messages. That program is opt-in only.

There is no way to opt out of the CSAM detection if you are using iCloud Photo Library. The closest equivalent to opting out, in this case, is to disable iCloud Photo Library.

Are Apple’s Privacy Policies changing?

No.

These changes and programs make me uncomfortable; what should I do?

We’d like to hear from you. We want to listen to your position about Apple’s child protection features, confirm that you understand the technology and discuss potential solutions. Contact us.

About arobasegroup

arobasegroup has been consulting with clients and advising on the best use of Apple technology since 1998. We listen to our customers and solve problems by addressing their specific, unique needs; we never rely on a one-size-fits-all solution or require them to use a specific product. arobasegroup is your advocate in all things related to information technology. Contact us to learn how we can help: info@arobasegroup.com.
